Compounding the Performance Improvements of Assembled Techniques in a Convolutional Neural Network

This paper is essentially a summary of a bag of techniques for training CNNs.

Neural Network Model

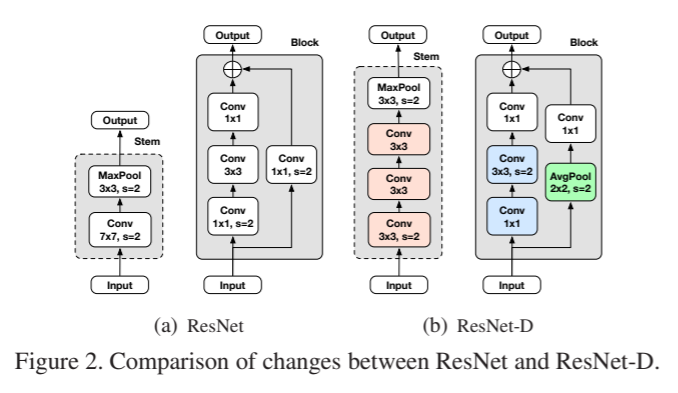

ResNet-D

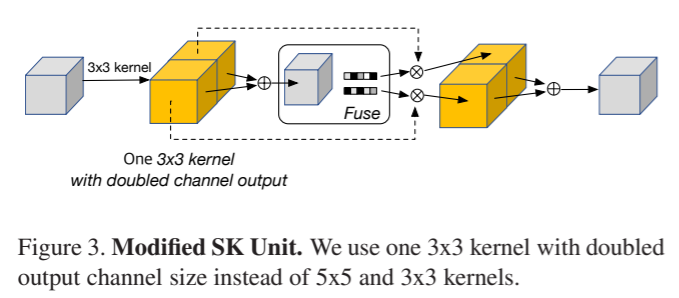

Channel-Attention (SE & SK)

The Squeeze-and-Excitation (SE) module was introduced in this paper.
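As a rough sketch of what an SE block computes (squeeze each channel with global average pooling, pass the result through a small bottleneck, then rescale the channels), here is a dependency-free Python version. The name `se_block`, the weight layout, and the omission of biases are assumptions made for illustration; this is not the paper's implementation.

```python
import math

def se_block(x, w1, w2):
    """Hypothetical SE sketch.

    x  : list of C channels, each an H x W nested list of floats
    w1 : (C/r) x C weight matrix of the bottleneck layer (bias omitted)
    w2 : C x (C/r) weight matrix of the expansion layer (bias omitted)
    """
    # Squeeze: global average pooling gives one scalar per channel.
    z = [sum(sum(row) for row in ch) / (len(ch) * len(ch[0])) for ch in x]
    # Excitation: FC -> ReLU -> FC -> sigmoid yields a gate in (0, 1) per channel.
    hidden = [max(0.0, sum(w * v for w, v in zip(row, z))) for row in w1]
    gates = [1.0 / (1.0 + math.exp(-sum(w * h for w, h in zip(row, hidden))))
             for row in w2]
    # Scale: multiply every value in a channel by that channel's gate.
    out = [[[g * v for v in row] for row in ch] for ch, g in zip(x, gates)]
    return out, gates
```

With all-zero weights every gate is `sigmoid(0) = 0.5`, so each channel is simply halved; trained weights would instead emphasize some channels over others.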

Selective Kernel (SK) is described in:

A lightweight SK implementation in PyTorch can be found here

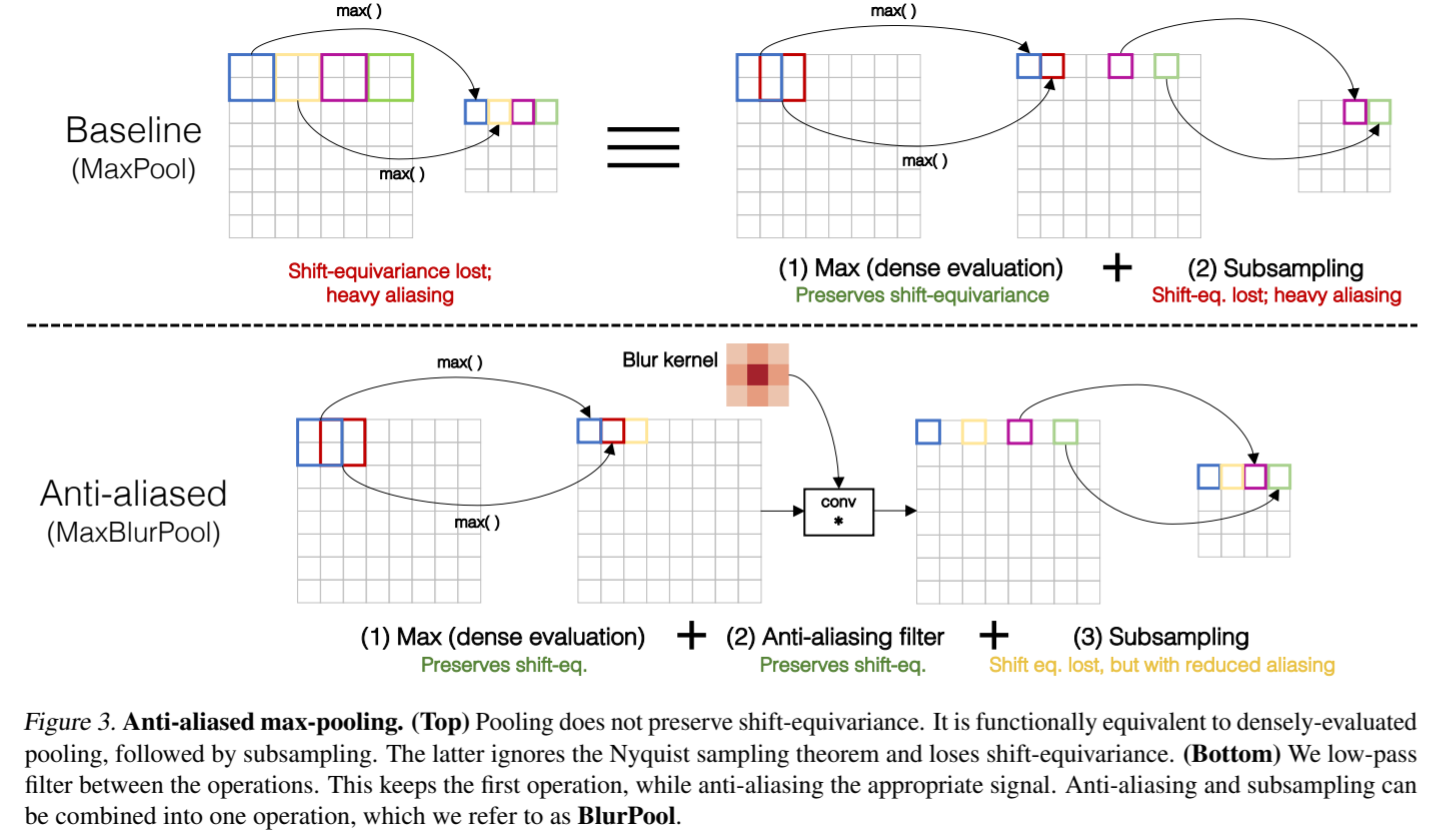

Anti-Alias Downsampling

AA downsampling was first proposed in this paper.

It is also called Blur-Pool; a Keras implementation can be found here

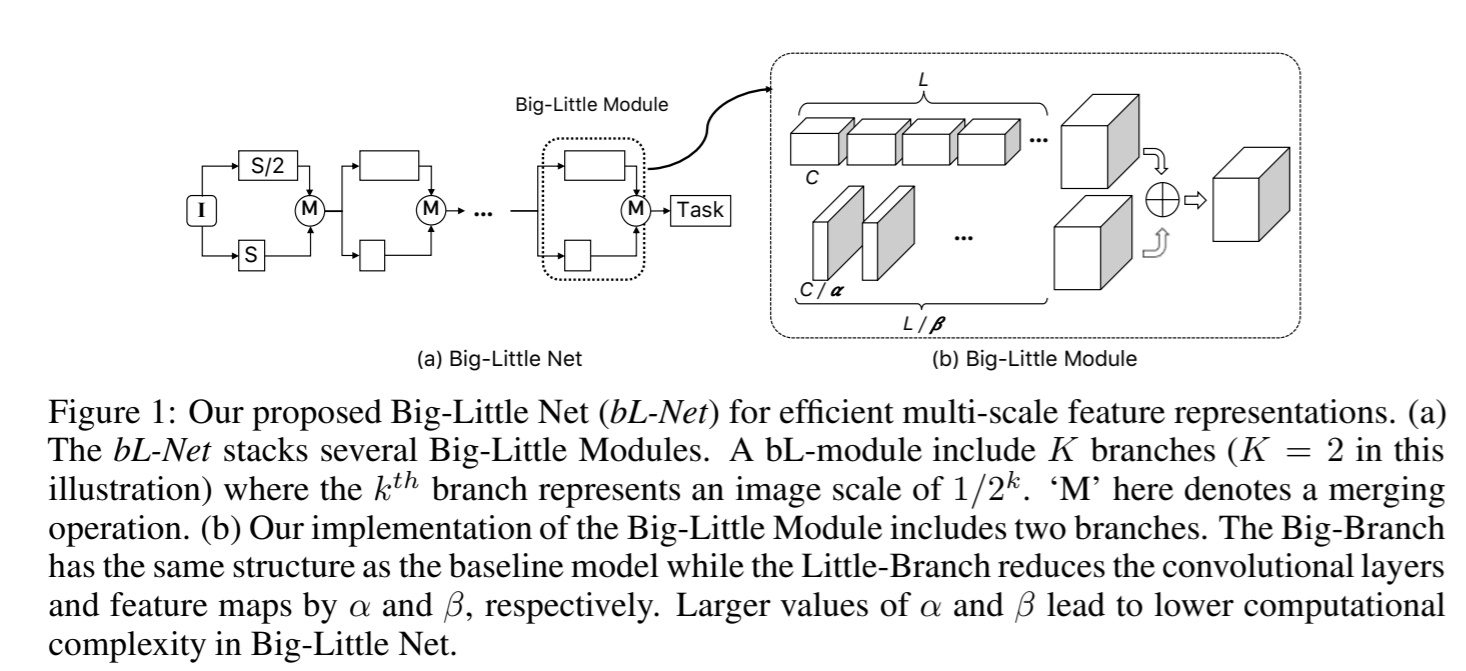
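To make the idea concrete, here is a minimal 1-D sketch of anti-aliased downsampling: low-pass filter first, then subsample. The `[1, 2, 1] / 4` kernel, the reflect padding, and the function name `blur_pool_1d` are assumptions chosen for illustration, not details taken from the paper or the Keras code.

```python
def blur_pool_1d(signal, stride=2):
    """Blur with a [1, 2, 1] / 4 kernel, then keep every `stride`-th sample.

    Assumes len(signal) >= 2 so that reflect padding is defined.
    """
    kernel = (0.25, 0.5, 0.25)
    # Reflect-pad by one sample on each side so the output keeps the input length.
    padded = [signal[1]] + list(signal) + [signal[-2]]
    blurred = [sum(k * padded[i + j] for j, k in enumerate(kernel))
               for i in range(len(signal))]
    # Subsample only after the high frequencies have been attenuated.
    return blurred[::stride]


wave = [0, 1, 0, 1, 0, 1, 0, 1]
shifted = wave[1:] + wave[:1]          # same pattern, shifted by one sample
naive = (wave[::2], shifted[::2])      # plain strided subsampling
smooth = (blur_pool_1d(wave), blur_pool_1d(shifted))
```

On this alternating signal, plain subsampling returns all zeros for `wave` and all ones for `shifted`, while the blurred version returns 0.5 everywhere for both, which is the shift-robustness that anti-aliasing is after.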

Big Little Network (BL)

Big Little Network was first proposed in this paper.

Regularization

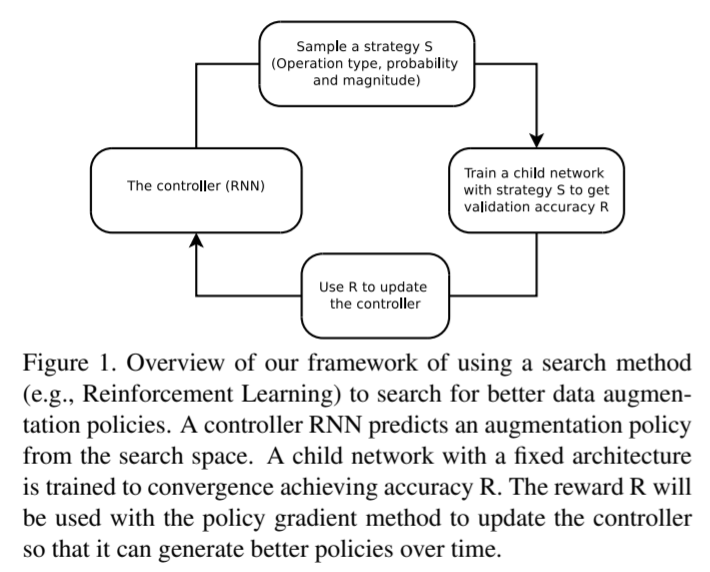

AutoAugment

AutoAugment was proposed in this paper, which applies reinforcement learning to train an agent that chooses image augmentations.

The open-source TensorFlow code can be found here

Mixup

Mixup has been introduced in Bag of Freebies

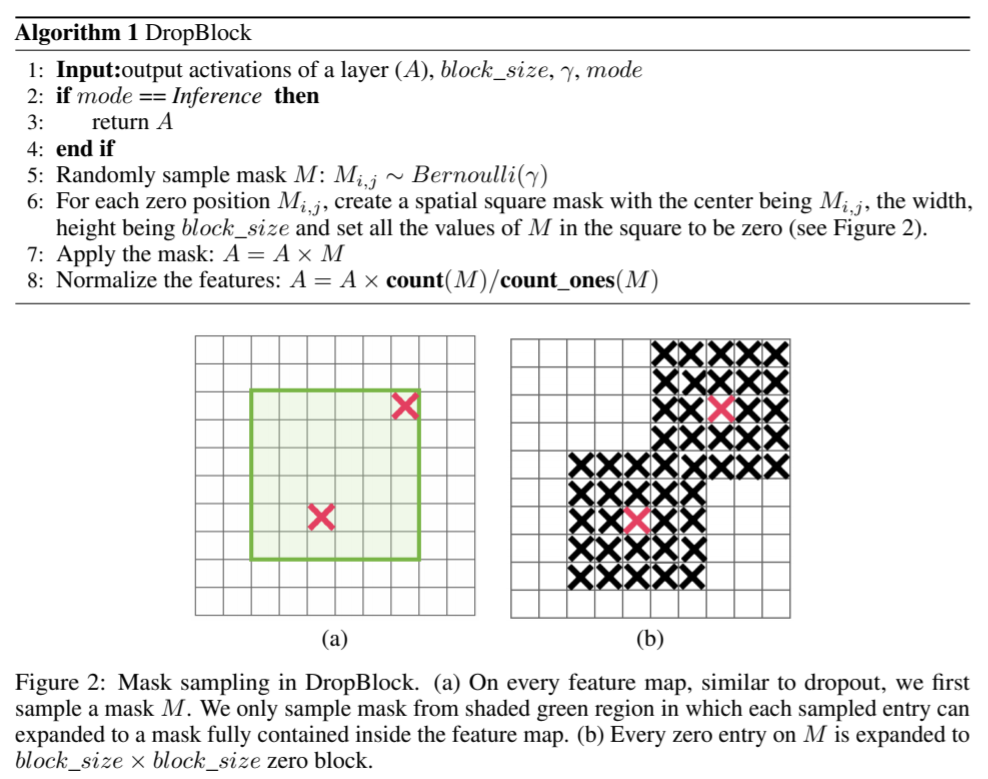
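For reference, the core of mixup fits in a few lines: two examples and their one-hot labels are blended with a weight drawn from a Beta distribution. The sketch below uses flat Python lists; the function name `mixup`, the default `alpha=0.2`, and the `rng` parameter are assumptions for illustration.

```python
import random

def mixup(x1, y1, x2, y2, alpha=0.2, rng=random):
    """Blend two (input, one-hot label) pairs with lam ~ Beta(alpha, alpha)."""
    lam = rng.betavariate(alpha, alpha)
    # The same weight is applied to the inputs and to the labels.
    x = [lam * a + (1.0 - lam) * b for a, b in zip(x1, x2)]
    y = [lam * a + (1.0 - lam) * b for a, b in zip(y1, y2)]
    return x, y, lam


rng = random.Random(0)  # seeded only to make the example repeatable
x, y, lam = mixup([1.0, 0.0, 0.0], [1.0, 0.0],
                  [0.0, 0.0, 1.0], [0.0, 1.0], rng=rng)
```

The mixed label `y` is a soft distribution `[lam, 1 - lam]`, so the loss has to accept soft targets (for example, cross-entropy against a probability vector).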

DropBlock

DropBlock was first proposed in this paper.

A PyTorch implementation of DropBlock can be found here
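A small sketch of the mechanism, for a single 2-D feature map: seed positions are sampled at a rate `gamma`, a `block_size` x `block_size` square is zeroed at each seed, and the surviving activations are rescaled. The helper names are hypothetical, and sampling block corners rather than block centers is a simplification assumed here.

```python
import random

def dropblock_mask(height, width, block_size, drop_prob, rng=random):
    """Return a 0/1 mask in which contiguous square blocks are dropped."""
    valid_h = height - block_size + 1
    valid_w = width - block_size + 1
    # Seed rate chosen so that roughly `drop_prob` of all units end up dropped.
    gamma = drop_prob / block_size ** 2 * (height * width) / (valid_h * valid_w)
    mask = [[1] * width for _ in range(height)]
    for top in range(valid_h):
        for left in range(valid_w):
            if rng.random() < gamma:
                # Zero the whole block whose top-left corner is (top, left).
                for i in range(top, top + block_size):
                    for j in range(left, left + block_size):
                        mask[i][j] = 0
    return mask

def apply_dropblock(feature, mask):
    """Apply the mask and rescale so the expected activation sum is preserved."""
    kept = sum(map(sum, mask))
    total = len(mask) * len(mask[0])
    scale = total / kept if kept else 0.0
    return [[v * m * scale for v, m in zip(f_row, m_row)]
            for f_row, m_row in zip(feature, mask)]
```

Compared with ordinary dropout, whole neighborhoods disappear at once, so the network cannot recover a dropped unit from its spatially correlated neighbors.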

Label Smoothing

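Label smoothing originates from Inception-v3: during training, with some probability the original label is not used, and another class chosen uniformly at random is taken as the ground truth instead. In expectation, this amounts to training against a softened target distribution rather than a one-hot vector.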
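In practice, the random replacement is usually folded into the target itself. A minimal sketch, assuming the Inception-v3 formulation in which a mass of `epsilon` is spread uniformly over all `num_classes` classes (the function name and the default `epsilon=0.1` are illustrative):

```python
def smooth_labels(label, num_classes, epsilon=0.1):
    """Return the smoothed target: (1 - epsilon) * one_hot + epsilon / num_classes."""
    uniform = epsilon / num_classes
    return [(1.0 - epsilon if k == label else 0.0) + uniform
            for k in range(num_classes)]


target = smooth_labels(label=2, num_classes=4, epsilon=0.1)
```

For four classes and `epsilon=0.1`, the true class receives 0.925 and every other class 0.025, so the target still sums to one.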

The paper indeed shows that almost all of these methods improve both the ImageNet results and the transfer learning results.